
Kubernetes networking allows Kubernetes components to communicate with each other and with other applications. Kubernetes differs from many other platforms because it uses a flat network structure. Every pod gets its own IP address, so there is no need to map host ports to container ports. This lets Kubernetes run distributed systems that share machines between applications without having to coordinate dynamically allocated ports.

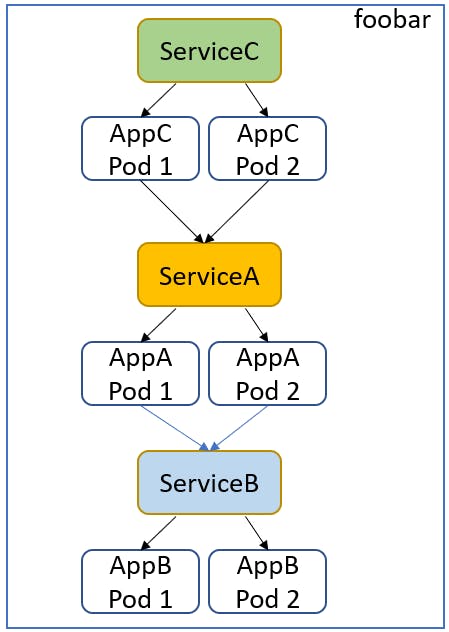
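To make the IP-per-pod idea concrete, here is a small Python sketch. It is a toy model, not how any particular network plugin allocates addresses. It assumes a hypothetical cluster CIDR of 10.244.0.0/16 split into one /24 per node, and it reserves the first address of each node subnet as a hypothetical gateway. It shows that two pods can both listen on port 80 without any host-port mapping, because their (IP, port) pairs never collide.

```python
import ipaddress
from itertools import islice

# Hypothetical cluster-wide pod CIDR; real clusters choose their own range.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")

def node_subnets(cluster_cidr, prefix=24):
    """Split the cluster CIDR into per-node pod subnets (one /24 per node here)."""
    return cluster_cidr.subnets(new_prefix=prefix)

def assign_pod_ips(subnet, pod_names):
    """Give each pod on a node its own address from that node's subnet."""
    # hosts() already skips the network and broadcast addresses;
    # we also skip .1, treated here as a hypothetical gateway address.
    usable = islice(subnet.hosts(), 1, None)
    return {name: str(ip) for name, ip in zip(pod_names, usable)}

subnets = list(islice(node_subnets(CLUSTER_CIDR), 2))
node_a = assign_pod_ips(subnets[0], ["web-1", "web-2"])
node_b = assign_pod_ips(subnets[1], ["web-3"])

# Every pod listens on container port 80, yet no endpoint collides,
# because each pod has a distinct IP address on the flat network.
endpoints = [(ip, 80) for pods in (node_a, node_b) for ip in pods.values()]
print(node_a)  # {'web-1': '10.244.0.2', 'web-2': '10.244.0.3'}
print(node_b)  # {'web-3': '10.244.1.2'}
print(len(endpoints) == len(set(endpoints)))  # True
```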

Kubernetes Services

A Kubernetes Service is a logical abstraction over a group of pods in a cluster that all perform the same function. It represents the set of pods running an application or functional component and gives them a single, stable point of access.

Services are needed because pods in Kubernetes are short-lived and can be replaced at any time. Kubernetes guarantees that the desired number of pod replicas is running, but not that any individual pod stays alive. A pod that needs to communicate with another pod therefore cannot rely on that pod's IP address. Instead, it connects to the Service, which relays the traffic to a relevant, currently running pod.
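The toy Python model below illustrates the idea above: a Service keeps a fixed name and label selector, while the set of matching pods changes underneath it. The pod names, labels and IPs are hypothetical. The round-robin choice is a simplification, since real proxies such as kube-proxy use their own backend-selection strategies.

```python
from dataclasses import dataclass
from itertools import count

@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    ready: bool = True

class Service:
    """Toy model of a Service: a stable name in front of a changing set of pods."""

    def __init__(self, name, selector):
        self.name = name
        self.selector = selector
        self._counter = count()  # drives a simple round-robin choice

    def endpoints(self, pods):
        """Return IPs of ready pods whose labels match every selector key."""
        return [
            p.ip for p in pods
            if p.ready and all(p.labels.get(k) == v for k, v in self.selector.items())
        ]

    def route(self, pods):
        """Pick one current endpoint; clients never need to know pod IPs."""
        eps = self.endpoints(pods)
        if not eps:
            raise RuntimeError(f"service {self.name} has no ready endpoints")
        return eps[next(self._counter) % len(eps)]

pods = [
    Pod("backend-a", "10.244.0.5", {"app": "backend"}),
    Pod("backend-b", "10.244.1.7", {"app": "backend"}),
    Pod("frontend-a", "10.244.0.9", {"app": "frontend"}),
]
svc = Service("backend", {"app": "backend"})
print(svc.endpoints(pods))  # ['10.244.0.5', '10.244.1.7']

# backend-a dies and is replaced by a pod with a new IP; the Service is unchanged.
pods[0] = Pod("backend-c", "10.244.2.3", {"app": "backend"})
print(svc.endpoints(pods))  # ['10.244.2.3', '10.244.1.7']
print(svc.route(pods), svc.route(pods))  # 10.244.2.3 10.244.1.7
```

Clients only ever hold the name "backend", so replacing a pod does not break them.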

There are four types of services that Kubernetes supports: ClusterIP, NodePort, LoadBalancer, and ExternalName.

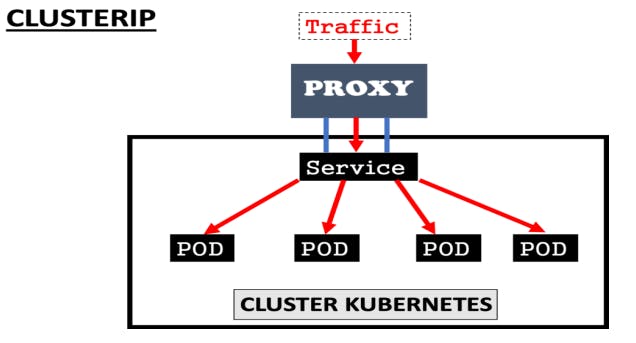

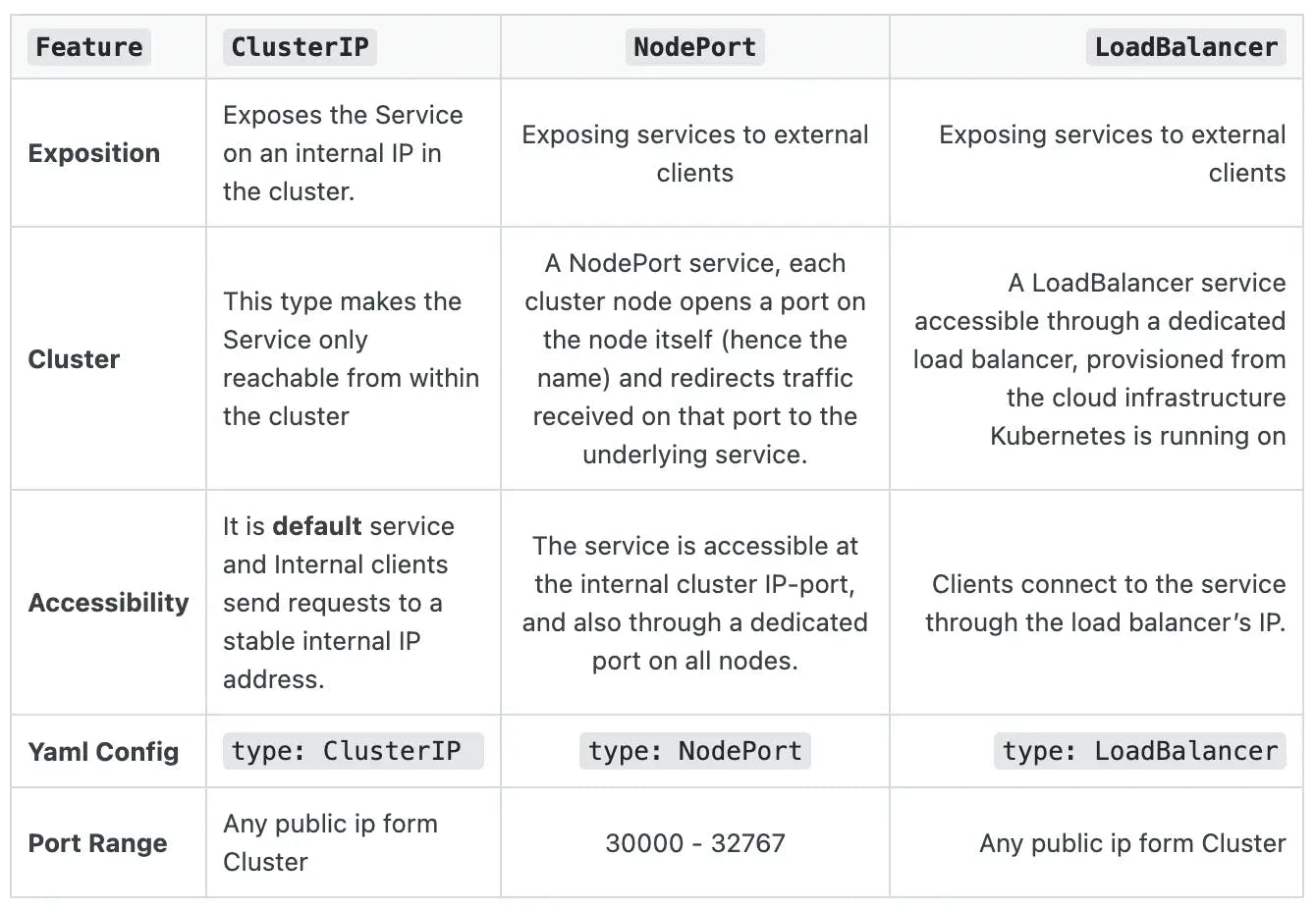

Cluster IP

ClusterIP is the default Service type. It gives the Service an internal virtual IP address through which pods within the cluster can communicate.

If you don't specify a type, your Service is exposed on a ClusterIP.

A ClusterIP Service can't be accessed from outside the cluster.

This Service type is used for internal networking between your workloads, for debugging your services, and for displaying internal dashboards.

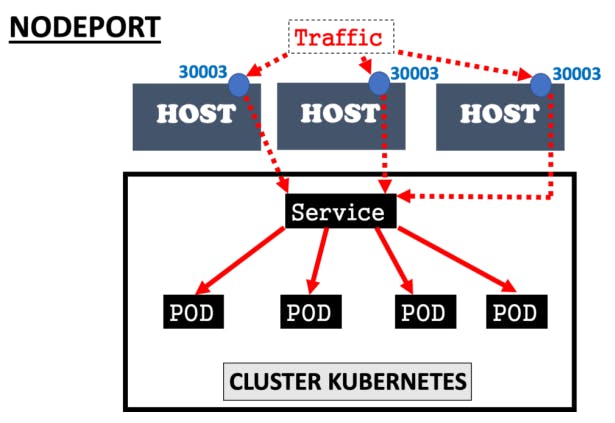
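As a sketch, here is a ClusterIP Service manifest built as a Python dict and printed as JSON. kubectl apply -f accepts JSON as well as YAML, so the output could be saved to a file and applied. The Service name "backend", the label app=backend and the ports 80/8080 are hypothetical.

```python
import json

def cluster_ip_service(name, app_label, port, target_port):
    """Build a ClusterIP Service manifest (type could be omitted; ClusterIP is the default)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": app_label},  # pods carrying the label app=<app_label>
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }

manifest = cluster_ip_service("backend", "backend", 80, 8080)
print(json.dumps(manifest, indent=2))
```

Other pods would reach this Service on port 80 via its cluster IP or name, and the traffic is forwarded to port 8080 on the selected pods.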

NodePort

NodePort is one of the simplest ways to expose a Service outside the cluster. Unless you specify a port, Kubernetes allocates one from a configured range (30000-32767 by default). Traffic arriving on that port is forwarded to the Service, which sends it on to the targetPort of one of the pods. It is handy for development and testing, or when you run your own external load balancer.

A NodePort publicly exposes a Service on a fixed port number, so you can reach it from outside your cluster. You'll need to use any node's IP address together with the NodePort number, e.g. 123.123.123.123:30000.

Creating a NodePort will open that port on every node in your cluster. Kubernetes will automatically route port traffic to the service it’s linked to.

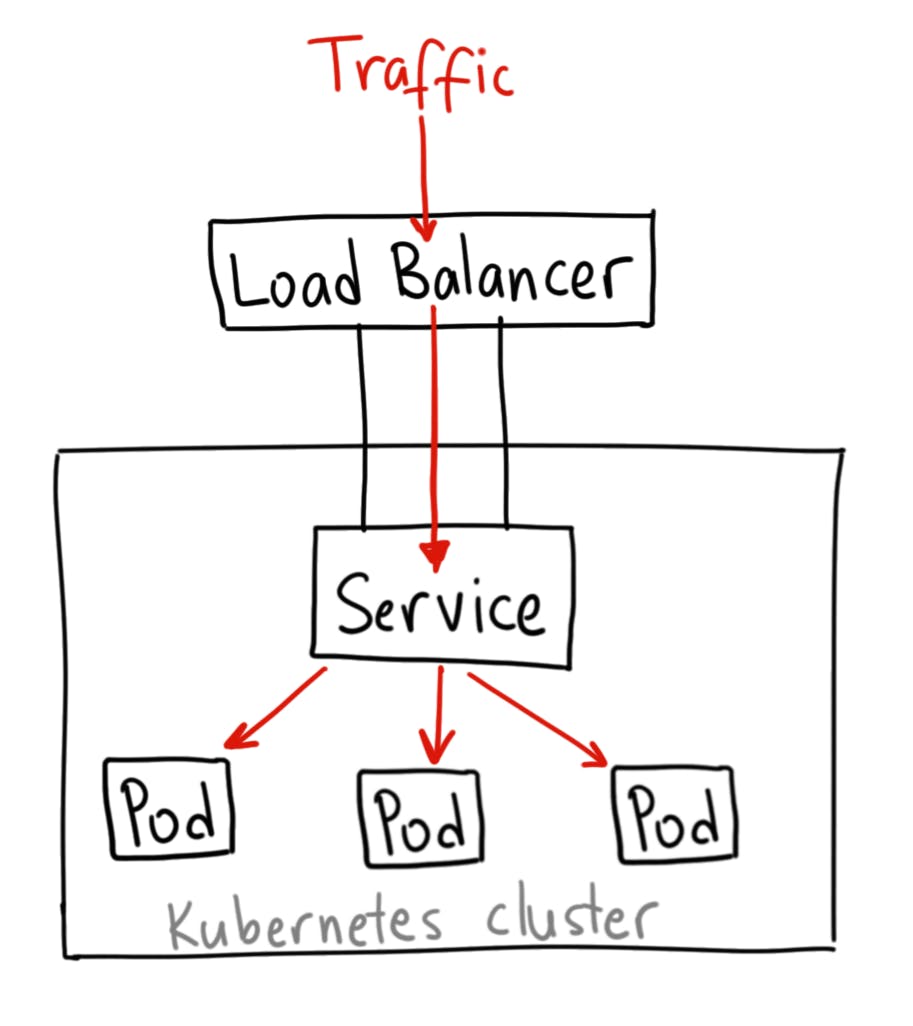
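The Python sketch below builds a NodePort Service manifest and lists the addresses it would be reachable on. It assumes the API server's default --service-node-port-range of 30000-32767, which clusters can change. The Service name, ports and node IPs are hypothetical.

```python
import json

# Default node port range of the API server; a cluster may be configured differently.
NODE_PORT_RANGE = range(30000, 32768)

def node_port_service(name, app_label, port, target_port, node_port):
    """Build a NodePort Service manifest after checking the port against the default range."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port, "nodePort": node_port}],
        },
    }

def external_addresses(node_ips, node_port):
    """The same port is opened on every node, so any node IP works."""
    return [f"{ip}:{node_port}" for ip in node_ips]

svc = node_port_service("web", "web", 80, 8080, 30000)
print(json.dumps(svc["spec"], indent=2))
print(external_addresses(["123.123.123.123", "123.123.123.124"], 30000))
```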

LoadBalancer

A LoadBalancer Service is an extension of the NodePort Service. When you create one, Kubernetes automatically creates the NodePort and ClusterIP Services that the external load balancer routes to.

It integrates NodePort with cloud-based load balancers, exposing the Service externally through a cloud provider's load balancer.

Each cloud provider (AWS, Azure, GCP, etc.) has its own native load balancer implementation. The cloud provider creates a load balancer, which then automatically routes requests to your Kubernetes Service.

Traffic from the external load balancer is directed at the backend Pods. The cloud provider decides how it is load balanced.

The actual creation of the load balancer happens asynchronously.

Every Service you expose this way gets its own load balancer and external IP address, so exposing many services to the outside world means creating many load balancers.
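Because the load balancer is created asynchronously, its address only shows up later in the Service's status. The Python sketch below builds a LoadBalancer manifest and reads status.loadBalancer.ingress, returning None while provisioning is still pending. The names, ports and the address 203.0.113.10 are example values. The status is filled in by hand here to stand in for what a cloud controller would eventually record. Some providers report a hostname rather than an IP.

```python
def load_balancer_service(name, app_label, port, target_port):
    """Build a LoadBalancer Service manifest; the cloud provider fills in status later."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }

def external_address(service):
    """Return the provisioned IP or hostname, or None while provisioning is pending."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not ingress:
        return None
    first = ingress[0]
    return first.get("ip") or first.get("hostname")

svc = load_balancer_service("web", "web", 80, 8080)
print(external_address(svc))  # None: the load balancer is still being created

# Simulated: later, the cloud controller records the address in the Service status.
svc["status"] = {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}
print(external_address(svc))  # 203.0.113.10
```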

ExternalName

Services of type ExternalName map a Service to a DNS name, rather than to a set of pods chosen by a typical selector such as my-service.

You specify these Services with the spec.externalName parameter. The Service is mapped to the contents of the externalName field (e.g. foo.bar.example.com): the cluster DNS returns a CNAME record with that value.

No proxying of any kind is established.
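The Python sketch below builds an ExternalName Service and models the record the cluster DNS would serve for it. It assumes a hypothetical namespace "prod" and the common default cluster domain cluster.local, which is configurable. The Service name and target come from the example above. No selector or pods are involved; the Service is only a DNS alias.

```python
import json

def external_name_service(name, namespace, external_name):
    """Build an ExternalName Service: no selector, no pods, just a DNS alias."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": "ExternalName", "externalName": external_name},
    }

def cname_record(service, cluster_domain="cluster.local"):
    """Model the answer cluster DNS gives: the Service FQDN is a CNAME for externalName."""
    meta, spec = service["metadata"], service["spec"]
    fqdn = f"{meta['name']}.{meta['namespace']}.svc.{cluster_domain}"
    return (fqdn, "CNAME", spec["externalName"])

svc = external_name_service("my-service", "prod", "foo.bar.example.com")
print(json.dumps(svc, indent=2))
print(cname_record(svc))
# ('my-service.prod.svc.cluster.local', 'CNAME', 'foo.bar.example.com')
```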

Service Types Comparison: ClusterIP vs NodePort vs LoadBalancer

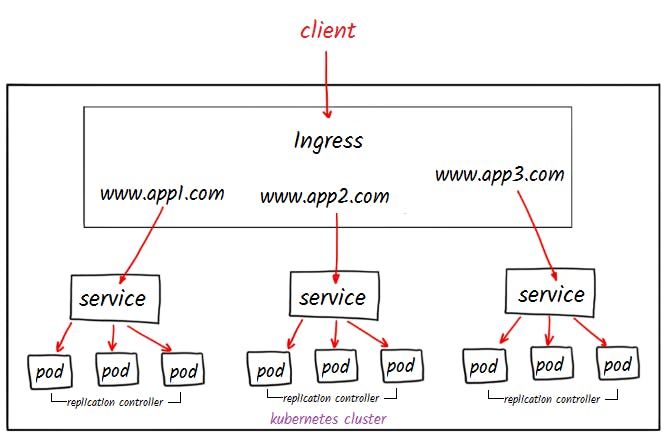

Ingress

An Ingress is an object that allows access to Kubernetes services from outside the Kubernetes cluster.

You can configure access by creating a collection of rules that define which inbound connections reach which services.

An Ingress can be configured to give Services externally-reachable URLs, load balance traffic, terminate SSL/TLS, and offer name-based virtual hosting.

An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer.

Ingress provides routing rules to manage external users’ access to the services in a Kubernetes cluster, typically via HTTPS/HTTP.

Note - Ingress is not a Service type, but it acts as the entry point for your cluster. It lets you consolidate your routing rules into a single resource as it can expose multiple services under the same IP address.

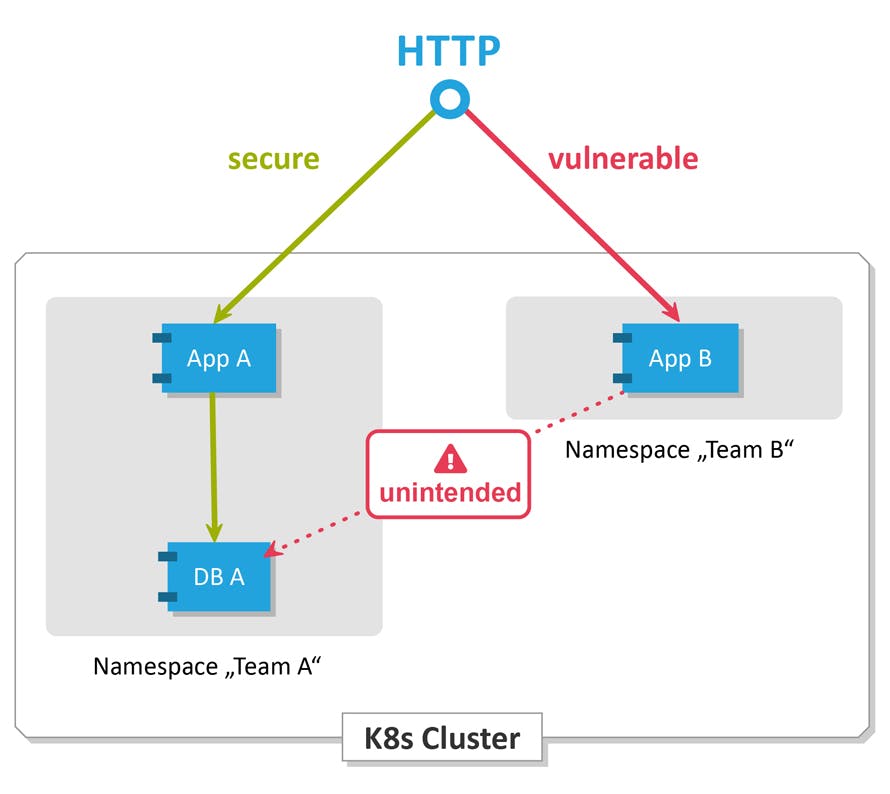
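Here is an Ingress manifest (networking.k8s.io/v1) written as a Python dict, followed by a toy function approximating how an Ingress controller picks a backend for Prefix paths. The host shop.example.com, the Services web and api, and the TLS secret shop-tls are hypothetical. The routing function ignores Exact and ImplementationSpecific path types, wildcard hosts and default backends, all of which real controllers handle.

```python
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "example-ingress"},
    "spec": {
        "tls": [{"hosts": ["shop.example.com"], "secretName": "shop-tls"}],
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [
                {"path": "/", "pathType": "Prefix",
                 "backend": {"service": {"name": "web", "port": {"number": 80}}}},
                {"path": "/api", "pathType": "Prefix",
                 "backend": {"service": {"name": "api", "port": {"number": 8080}}}},
            ]},
        }],
    },
}

def prefix_matches(rule_path, request_path):
    """Prefix match on whole path elements: /api matches /api/v1 but not /apis."""
    rule = [seg for seg in rule_path.split("/") if seg]
    req = [seg for seg in request_path.split("/") if seg]
    return req[: len(rule)] == rule

def route(ingress, host, path):
    """Return 'service:port' for the longest matching Prefix path, or None."""
    best = None
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") not in (None, host):
            continue  # rule is for a different virtual host
        for p in rule["http"]["paths"]:
            if p["pathType"] == "Prefix" and prefix_matches(p["path"], path):
                if best is None or len(p["path"]) > len(best["path"]):
                    best = p
    if best is None:
        return None
    svc = best["backend"]["service"]
    return f"{svc['name']}:{svc['port']['number']}"

print(route(ingress, "shop.example.com", "/api/orders"))  # api:8080
print(route(ingress, "shop.example.com", "/cart"))        # web:80
print(route(ingress, "other.example.com", "/"))           # None
```

Both Services sit behind one entry point, which is the consolidation the note above describes.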

Network Policies

Implementing network policies is critical to operating a Kubernetes environment securely. Without a network policy, all Pods within a cluster can communicate with each other by default. So when a Pod holds sensitive data, such as a backend database with confidential information, we must ensure that only specific frontend Pods can connect to it.

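Kubernetes network policies let administrators and developers use rules to control which network traffic is allowed. The policies are enforced by the cluster's network plugin, so they only take effect if that plugin supports NetworkPolicy.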
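For the database scenario above, here is a sketch of a NetworkPolicy manifest built in Python. It selects the database pods and admits ingress only from frontend pods on one TCP port. The labels app=database and app=frontend, the policy name and port 5432 are hypothetical. The podSelector inside "from" matches pods in the policy's own namespace.

```python
import json

def allow_from_frontend(db_label="database", frontend_label="frontend", port=5432):
    """NetworkPolicy that isolates the database pods and admits only frontend pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "db-allow-frontend"},
        "spec": {
            "podSelector": {"matchLabels": {"app": db_label}},  # pods this policy applies to
            "policyTypes": ["Ingress"],  # only incoming traffic is restricted
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": frontend_label}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

policy = allow_from_frontend()
print(json.dumps(policy, indent=2))
```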

How Does Network Policy Work?

There are many situations in which you need to allow or deny traffic from specific sources. A network policy specifies how groups of pods are allowed to communicate with each other and with external endpoints.

Rules:

Traffic is allowed unless there is a Kubernetes network policy selecting a pod.

Communication is denied if policies are selecting the pod but none of them have any rules allowing it.

Traffic is allowed if there is at least one policy that allows it.
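The three rules above can be sketched as a small Python function for the ingress direction. The policy format here is a deliberately simplified, hypothetical one: a "podSelector" label dict plus an "allowFrom" list of source label dicts, not the real NetworkPolicy schema. It ignores ports, namespaces, ipBlock and egress. As in Kubernetes, an empty selector {} selects every pod.

```python
def matches(selector, labels):
    """True if every key/value in the selector appears in the pod's labels."""
    return all(labels.get(k) == v for k, v in selector.items())

def ingress_allowed(policies, dst_labels, src_labels):
    """Apply the three rules to one incoming connection."""
    selecting = [p for p in policies if matches(p["podSelector"], dst_labels)]
    if not selecting:
        return True  # rule 1: no policy selects the pod, so all traffic is allowed
    # rules 2 and 3: the pod is isolated; allow only if some selecting policy permits the source
    return any(
        matches(src_sel, src_labels)
        for p in selecting
        for src_sel in p["allowFrom"]
    )

policies = [{"podSelector": {"app": "database"}, "allowFrom": [{"app": "frontend"}]}]
print(ingress_allowed(policies, {"app": "cache"}, {"app": "batch"}))        # True
print(ingress_allowed(policies, {"app": "database"}, {"app": "frontend"}))  # True
print(ingress_allowed(policies, {"app": "database"}, {"app": "batch"}))     # False

# A policy that selects every pod but allows nothing acts as "deny all".
deny_all = [{"podSelector": {}, "allowFrom": []}]
print(ingress_allowed(deny_all, {"app": "web"}, {"app": "frontend"}))       # False
```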

DNS

Kubernetes clusters run a DNS service (usually CoreDNS) that provides name resolution for Services and Pods. It allows you to use easy-to-remember names, such as "webserver" or "database", to reach the applications running in the cluster instead of having to remember their IP addresses.
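The Python sketch below shows the name a Service gets and the candidates a pod's resolver tries for a short name. Service names follow the form service.namespace.svc.cluster-domain, and pods get a search list of their namespace's svc domain, svc, and the cluster domain. It assumes the common default domain cluster.local, which is configurable. The namespaces "prod" and "staging" are hypothetical. It ignores resolver options such as ndots and fully qualified names ending in a dot.

```python
def service_fqdn(service, namespace, cluster_domain="cluster.local"):
    """Fully qualified DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"

def search_candidates(short_name, pod_namespace, cluster_domain="cluster.local"):
    """Names a pod's resolver tries for a short name, in search-list order."""
    search = [f"{pod_namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    return [f"{short_name}.{domain}" for domain in search]

print(service_fqdn("database", "prod"))
# database.prod.svc.cluster.local
print(search_candidates("database", "prod"))
# ['database.prod.svc.cluster.local', 'database.svc.cluster.local', 'database.cluster.local']

# From a pod in "prod", "database.staging" reaches the Service in "staging"
# through the second search domain.
print(search_candidates("database.staging", "prod")[1])
# database.staging.svc.cluster.local
```

So a pod in the prod namespace can simply connect to "database", and the first candidate resolves to the Service in its own namespace.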

CNI Plugins

Container Network Interface (CNI) is a framework for dynamically configuring networking resources. It consists of a specification and a set of libraries, written in Go, for writing plugins. The specification defines an interface for configuring the network, provisioning IP addresses, and maintaining connectivity across multiple hosts.

When used with Kubernetes, CNI integrates with the kubelet (through the container runtime) to automatically configure an overlay or underlay network between pods.
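CNI plugins are configured with JSON files, typically placed in a directory such as /etc/cni/net.d/ on each node. The Python sketch below writes out an illustrative configuration for the reference "bridge" plugin with "host-local" IP address management. The network name "mynet", bridge "cni0" and subnet 10.22.0.0/16 are example values, not something your cluster's plugin necessarily uses.

```python
import ipaddress
import json

# Illustrative config for the reference "bridge" plugin with "host-local" IPAM.
# All names and the subnet are example values.
conf = {
    "cniVersion": "1.0.0",
    "name": "mynet",
    "type": "bridge",           # plugin binary that creates the bridge and veth pairs
    "bridge": "cni0",
    "isGateway": True,          # give the bridge an IP so it can act as the pods' gateway
    "ipMasq": True,             # masquerade traffic leaving the pod network
    "ipam": {
        "type": "host-local",   # allocate pod IPs from a local range on this host
        "subnet": "10.22.0.0/16",
        "routes": [{"dst": "0.0.0.0/0"}],
    },
}

# Sanity check before writing the file: the subnet must parse as a network.
subnet = ipaddress.ip_network(conf["ipam"]["subnet"])
print(subnet.num_addresses)  # 65536
print(json.dumps(conf, indent=2))
```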

Thank you for reading! Many more are in the queue.

~Nikunj Kishore Tiwari

Great initiative by the #trainwithshubham community. Thank you, Shubham Londhe, for guiding us.